The typical approach to global illumination rendering using ray tracing is to trace rays from the camera and gather radiance from the light sources. This approach is used to solve the light transport equation (a.k.a. the rendering equation). This is usually what the term "path tracing" refers to. Here's the formulation of the light transport equation that Veach uses in his thesis (see chapter 3, specifically page 90):

\[ L_{\text{o}}(\mathbf{x},\, \omega_{\text{o}}) \,=\, L_e(\mathbf{x},\, \omega_{\text{o}}) \ +\, \int_{\mathcal{S}^2} L_{\text{o}}(\mathbf{x}_\mathcal{M}(\mathbf{x},\, \omega_{\text{i}}),\, -\omega_{\text{i}}) \, f_s(\mathbf{x},\, \omega_{\text{i}} \rightarrow \omega_{\text{o}}) \, d \sigma_{\mathbf{x}}^{\perp} (\omega_{\text{i}}) \]
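To make the recursion in this equation concrete, here is a minimal Python sketch of a one-sample path-tracing estimator in a hypothetical "furnace" scene. Every surface is assumed to be Lambertian with albedo ρ (so f_s = ρ/π) and to emit constant radiance L_e, which makes the exact solution L_e/(1 − ρ). Because the integral is taken against projected solid angle σ⊥, a cosine-weighted direction sample has density 1/π in that measure, so f_s divided by the pdf reduces to ρ. The scene, the function names, and the Russian roulette termination are illustrative assumptions, not the renderer's actual code.

```python
import random


def furnace_radiance_sample(emitted, albedo, rng, continue_prob=None):
    """Return one unbiased estimate of L_o in a hypothetical furnace scene.

    Every surface is assumed Lambertian (f_s = albedo / pi) and emits
    constant radiance `emitted`, so the exact answer is emitted / (1 - albedo).
    """
    if not 0.0 <= albedo < 1.0:
        raise ValueError("albedo must be in [0, 1) for the series to converge")
    if continue_prob is None:
        continue_prob = albedo  # a common Russian roulette choice
    estimate = 0.0
    throughput = 1.0
    while True:
        # L_e term at the current vertex.
        estimate += throughput * emitted
        # Russian roulette: stop with probability 1 - continue_prob.
        if rng.random() >= continue_prob:
            return estimate
        # Cosine-weighted sampling has pdf 1/pi w.r.t. projected solid angle,
        # so f_s / pdf = (albedo / pi) / (1 / pi) = albedo. Dividing by
        # continue_prob compensates for the roulette and keeps it unbiased.
        throughput *= albedo / continue_prob


def furnace_radiance(emitted, albedo, samples, seed=0):
    """Average many one-sample estimates with a seeded generator."""
    rng = random.Random(seed)
    total = sum(furnace_radiance_sample(emitted, albedo, rng) for _ in range(samples))
    return total / samples


print(furnace_radiance(1.0, 0.75, 100_000))  # close to 1 / (1 - 0.75) = 4
```

Each loop iteration corresponds to one more expansion of L_o inside the integral, so summing the emitted terms weighted by the accumulated throughput reproduces the series L_e(1 + ρ + ρ² + …).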

It's also possible to trace rays from the light sources and gather importance from the camera. This approach, sometimes called "light tracing", solves the importance transport equation. Here's the formulation of the importance transport equation that Veach uses in his thesis (see chapter 3, specifically page 91):

\[ W_{\text{o}}(\mathbf{x},\, \omega_{\text{o}}) \,=\, W_e(\mathbf{x},\, \omega_{\text{o}}) \ +\, \int_{\mathcal{S}^2} W_{\text{o}}(\mathbf{x}_\mathcal{M}(\mathbf{x},\, \omega_{\text{i}}),\, -\omega_{\text{i}}) \, f_s(\mathbf{x},\, \omega_{\text{o}} \rightarrow \omega_{\text{i}}) \, d \sigma_{\mathbf{x}}^{\perp} (\omega_{\text{i}}) \]
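Apart from replacing L with W, the key difference between the two equations is the argument order of f_s: importance is transported with the BSDF evaluated as f_s(x, ω_o → ω_i), the adjoint of the radiance case. The Python sketch below shows one way a renderer might make that switch explicit; pbrt uses a similar `TransportMode` enum, but the names and types here are hypothetical.

```python
from enum import Enum
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
# A BSDF at a fixed surface point x, called as f_s(w_a, w_b) = f_s(x, w_a -> w_b).
Bsdf = Callable[[Vec3, Vec3], float]


class TransportMode(Enum):
    RADIANCE = "radiance"      # paths traced from the camera
    IMPORTANCE = "importance"  # paths traced from the light


def eval_transport_bsdf(f_s: Bsdf, w_i: Vec3, w_o: Vec3, mode: TransportMode) -> float:
    """Evaluate the BSDF factor that appears inside the transport integral.

    w_o is the direction in which the transported quantity leaves x, and w_i
    is the sampled direction toward the next vertex, matching both equations.
    """
    if mode is TransportMode.RADIANCE:
        return f_s(w_i, w_o)  # f_s(x, w_i -> w_o)
    return f_s(w_o, w_i)      # f_s(x, w_o -> w_i), the adjoint BSDF
```

For a symmetric BSDF, such as a Lambertian one, both modes return the same value; the distinction matters for non-symmetric cases such as refraction or shading normals.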

Solving the importance transport equation requires two abilities: mapping a point and direction on the camera's lens/aperture/sensor to a point on the image plane, and evaluating the emitted importance for a given point and direction. Evaluating the emitted importance is a little tricky because it is not constant across the image plane. It is necessary (at least in my formulation) to take into account factors like the area of the aperture, the angle at which the light passes through the aperture, the distance from the aperture to the image plane, the angle at which the light hits the image plane, and the area of the image plane.
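For comparison, here is one common way to combine those factors; it may differ from the formulation described above. Assume an aperture of area A_ap, an image plane of area A_img perpendicular to the optical axis at distance d, and a ray leaving an aperture point at angle θ to the axis, with each aperture point treated like a pinhole (a simplifying assumption). Normalizing W_e so that it integrates to 1 over the aperture area and the projected solid angle of the image plane gives W_e = d² / (A_ap · A_img · cos⁴θ): one cosine comes from the aperture, one from the image plane, and two from the 1/r² falloff with r = d/cos θ. pbrt's perspective camera uses a comparable 1/(A · A_lens · cos⁴θ) form. The function names below are hypothetical.

```python
import math


def emitted_importance(cos_theta, aperture_area, image_area, image_distance):
    """Hypothetical W_e for a ray leaving the aperture at angle theta from the
    optical axis, assuming the ray lands inside the image plane.

    image_area is the area of the image plane placed at image_distance.
    """
    if cos_theta <= 0.0:
        return 0.0  # pointing away from the image plane: no importance
    return image_distance ** 2 / (aperture_area * image_area * cos_theta ** 4)


def importance_integral(side, image_distance, aperture_area=1.0, n=64):
    """Integrate W_e * cos(theta) over the solid angle subtended by a square
    image plane, for a single aperture point, with the midpoint rule.
    The result should equal 1 / aperture_area."""
    image_area = side * side
    h = side / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            u = -0.5 * side + (i + 0.5) * h
            v = -0.5 * side + (j + 0.5) * h
            r2 = u * u + v * v + image_distance ** 2
            cos_theta = image_distance / math.sqrt(r2)
            # d(omega) = cos(theta_image) dA / r^2, and theta_image == theta
            # because the image plane is perpendicular to the optical axis.
            d_omega = cos_theta * h * h / r2
            w = emitted_importance(cos_theta, aperture_area, image_area, image_distance)
            total += w * cos_theta * d_omega
    return total


print(importance_integral(side=2.0, image_distance=1.5))  # ≈ 1.0
```

Because the result is 1/A_ap for every aperture point, integrating over the whole aperture gives exactly 1, which is the normalization the cos⁴θ term is there to enforce.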

For many typical scenes, light tracing is very slow compared to path tracing from the camera; however, it is much faster for rendering certain types of transport paths (e.g., caustics). Bidirectional path tracing generalizes both techniques: it forms paths from all possible combinations of two subpaths, one traced from the light and one traced from the sensor/eye.
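In Veach's notation, a bidirectional strategy takes s vertices from the light subpath and t vertices from the eye subpath. A path with k edges has k + 1 vertices, so s + t = k + 1 and there are k + 2 strategies for each path length. The Python sketch below enumerates them; the function name and the labels are informal descriptions chosen for illustration, not standard terminology.

```python
def bdpt_strategies(num_edges):
    """List the (s, t) pairs that can form a path with num_edges edges.

    s = number of vertices taken from the light subpath,
    t = number of vertices taken from the eye (sensor) subpath,
    and s + t = num_edges + 1 vertices in total.
    """
    if num_edges < 1:
        raise ValueError("a path needs at least one edge")
    strategies = []
    for s in range(num_edges + 2):
        t = num_edges + 1 - s
        if s == 0:
            label = "eye subpath hits a light (path tracing without light sampling)"
        elif t == 0:
            label = "light subpath hits the sensor"
        elif t == 1:
            label = "connect light subpath to the sensor (light tracing)"
        elif s == 1:
            label = "connect eye subpath to a light sample (next event estimation)"
        else:
            label = "connect two interior subpath vertices"
        strategies.append((s, t, label))
    return strategies


for s, t, label in bdpt_strategies(2):
    print(f"s={s}, t={t}: {label}")
```

Pure light tracing corresponds to the t = 1 column and pure path tracing to s = 0; bidirectional path tracing evaluates all of them and weights their contributions.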

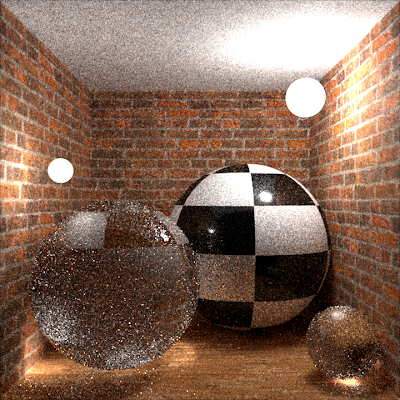

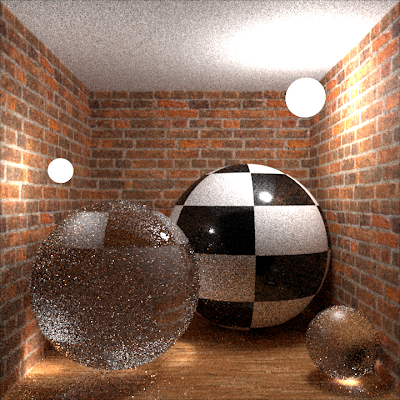

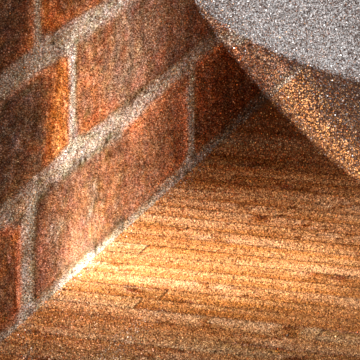

Bidirectional path tracing uses many parts of light tracing (e.g., originating rays from light sources and evaluating the importance emitted from sensors), so I decided to implement light tracing before full bidirectional path tracing. I rendered one of the images below using light tracing and the other using path tracing. I rendered the light tracing version in my new renderer (a revamped version of Photorealizer) and the path tracing version in Photorealizer. These images contain only paths of length 2, and they are identical apart from the noise, as they should be.

| Light tracing (starting on the light and gathering importance) | Path tracing (starting on the sensor and gathering radiance) |
| --- | --- |